[ --------- 40% ]

Status: Stopped

Reason: Very difficult to treat all edge cases (students’ grades) fairly

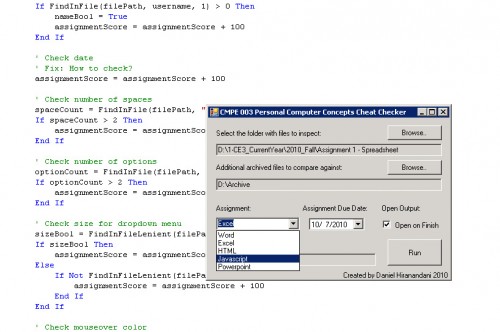

Using the Microsoft Office Cheat Checker application as a base, I wanted to add automatic grading for two of the class’s assignments: HTML and JavaScript. In these assignments, students modify base HTML and JavaScript files to learn fundamental changes such as creating hyperlinks, changing font sizes and colors, adding If/Else If/Else function cases, onMouseOver events, etc., so the changes we expect the students to make are fairly rigidly defined.

This project exploits the fact that each of the assignment requirements can be implemented in a small number of ways, so “grading” can be done by searching for the existence of particular strings, or the absence of particular strings.
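As a rough illustration of the idea, here is a minimal Python sketch of presence/absence grading. It is not the grader's actual code: the `Requirement` class, the `grade` function, the requirement names, and the sample submission are all hypothetical, and the case-insensitive comparison is an assumption made because HTML tag and attribute names are not case-sensitive.

```python
from dataclasses import dataclass, field


@dataclass
class Requirement:
    """One assignment requirement (hypothetical structure for illustration)."""
    name: str
    must_contain: list[str] = field(default_factory=list)
    must_not_contain: list[str] = field(default_factory=list)


def grade(source: str, requirements: list[Requirement]) -> dict[str, bool]:
    """Mark each requirement as met or not met using plain substring checks."""
    text = source.lower()  # assumption: compare case-insensitively
    results = {}
    for req in requirements:
        present = all(s.lower() in text for s in req.must_contain)
        absent = all(s.lower() not in text for s in req.must_not_contain)
        results[req.name] = present and absent
    return results


# Hypothetical requirements loosely based on the assignment description.
REQUIREMENTS = [
    Requirement("adds a hyperlink", must_contain=["<a href="]),
    Requirement("adds a mouseover handler", must_contain=["onmouseover="]),
    Requirement("removes the old alert text", must_not_contain=["Leaving so soon?"]),
]

submission = (
    '<a HREF="page2.html" onMouseOver="hi()">Next</a>'
    '<script>alert("Have a nice day!");</script>'
)
results = grade(submission, REQUIREMENTS)
```

Note that an empty file would still pass the "removes the old alert text" check, because an absence test cannot tell an edit from a deletion.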

Grading for the majority of the students is fairly straightforward, because most of them end up completing either 100% or 0% of each requirement, so it’s easy to say definitively that a submission has a 100% match or a 0% match for a term. Grading for the outliers is a bit trickier.

As an example of a simpler outlier case, one of the JavaScript assignment requirements is to change the JavaScript alert so that instead of saying “Leaving so soon?”, it says “Have a nice day!”. It’d be trivial to search for “Have a nice day!”, but what if a student gets more creative than that and puts “See ya!” instead? We could check that the document doesn’t contain “Leaving so soon?”, but that gives credit to the person who deletes the alert entirely.

A great way to solve this problem is to use regular expressions for pattern matching. Now, we can search for `alert\(\"(.*)\"\);` and check that the text inside the alert message is not “Leaving so soon?”. As powerful as this is, it still gives full credit to someone who simply adds a space to the message or changes a single character, but that at least means the person saw the alert and changed it.
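A minimal Python sketch of that check might look like the following. The names `ALERT_RE`, `ORIGINAL_MESSAGE`, and `alert_was_changed` are hypothetical, and the pattern is a slight variation on the one above: it uses a non-greedy group and tolerates optional whitespace, which are assumptions rather than what the grader necessarily used. It only handles double-quoted messages.

```python
import re

# Variation on the pattern above: non-greedy capture, optional whitespace.
ALERT_RE = re.compile(r'alert\(\s*"(.*?)"\s*\)\s*;')
ORIGINAL_MESSAGE = "Leaving so soon?"


def alert_was_changed(source: str) -> bool:
    """True if at least one alert exists and none still shows the original text."""
    messages = ALERT_RE.findall(source)
    return bool(messages) and ORIGINAL_MESSAGE not in messages


changed = alert_was_changed('alert("See ya!");')
unchanged = alert_was_changed('alert("Leaving so soon?");')
deleted = alert_was_changed("// alert removed")
one_space_edit = alert_was_changed('alert("Leaving so soon? ");')
```

Here a creative message such as “See ya!” passes, an untouched or deleted alert fails, and a one-space edit still passes, which matches the limitation described above.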

The two big challenges (and coincidentally the two ultimate hang-ups) are dealing with partial credit and checking that the code actually works. The student might have included all of the assignment requirements, but what if their page doesn’t do what it’s supposed to when the Submit button is pressed, or what if they forgot a less-than symbol or semicolon and the pattern we’re searching for doesn’t match? When I manually grade assignments, I can peek at the code to see how severe the mistake was and give partial credit based on how close they were, but that is very difficult to do programmatically, and especially to do fairly.

In developing this grader application, I spent quite a bit of time checking through students’ assignments to ensure that their files were graded fairly, and I found that there was a significant chance that I would run into the partial-credit situation without a good way to resolve it. I think it could work with enough tests to cover all of the edge cases, but until then, I wouldn’t feel right assigning a student a lower grade than they deserved because my program didn’t catch their tricky implementation.